Edge AI for Mission-Critical HMI

Extending the WING Ecosystem for Real-Time, Privacy-First Mobility

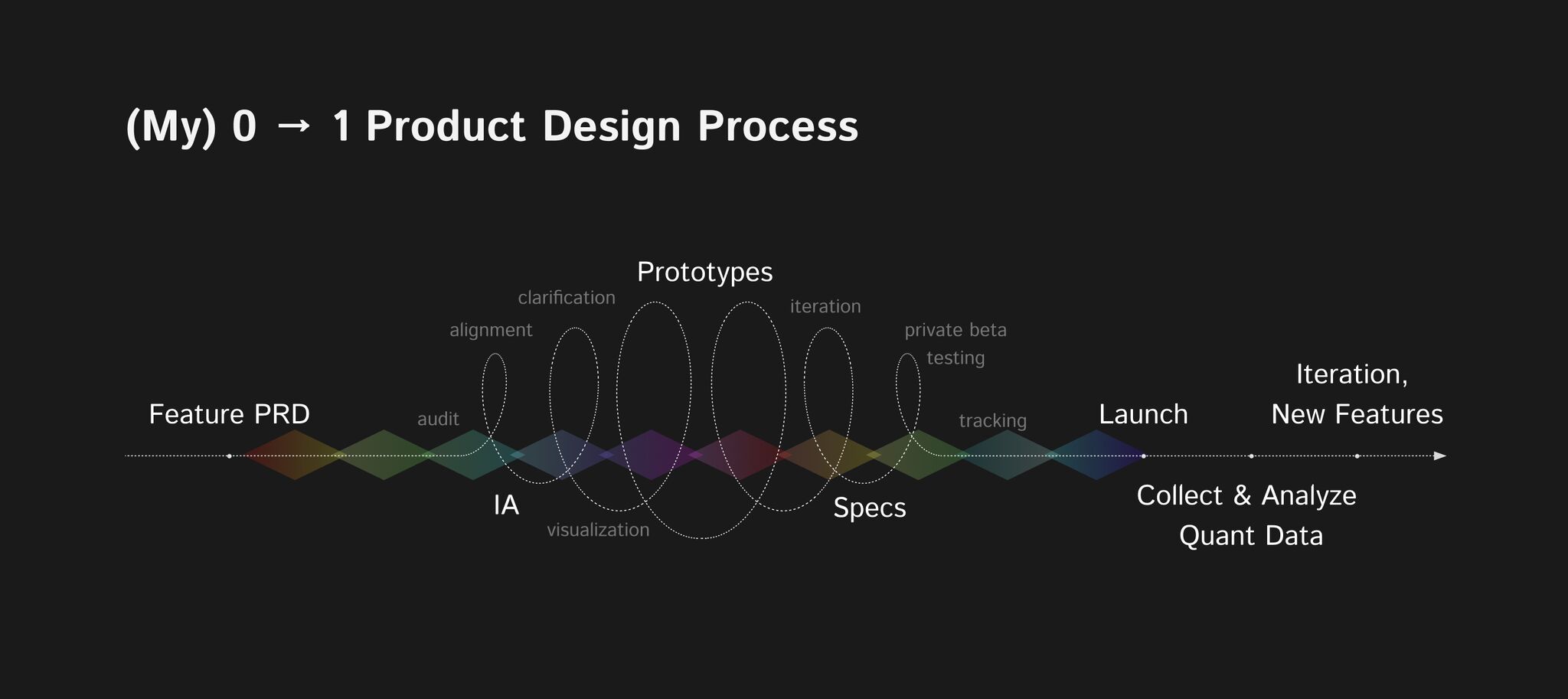

Role

UX/UI Designer

Duration

3 months

Skills

Human-AI Interaction (HAII), Systems Thinking, Human-Centered Design (HCD)

Overview

This project extends the WING HMI ecosystem, which was originally designed to centralize vehicle control into a streamlined, driver-first platform. With the rise of embedded AI in mobility systems, its next evolution, the WING Intelligence Platform, focuses on enabling real-time, privacy-respecting AI at the edge: directly inside the vehicle.

Our goal: Eliminate cloud dependency for mission-critical decisions and give users full control over their data and AI behavior.

Problem

Smart vehicles are increasingly AI-powered, offering predictive navigation, automated alerts, and conversational assistance. However, these systems rely heavily on cloud computation, which adds latency to decisions that must be made in a split second. Worse, users lack visibility into, or control over, how their driving data is collected and processed, which erodes trust.

The challenge: How might we design a real-time HMI system powered by edge AI that prioritizes both performance and user data sovereignty?

Proposed Solution

Research Approach: AI-Assisted Synthesis

As this was a conceptual project, we applied a synthetic research model based on AI-assisted generative research, a method sometimes described as computational ethnography.

Instead of conducting direct interviews, we used AI tools to extract and synthesize user sentiment and behavioral patterns from:

Automotive user forums (Tesla, Comma.ai, Rivian)

Product reviews of vehicle infotainment systems

Reddit and Twitter discussions on privacy and latency

Driver safety reports and UX studies in telematics

HMI blogs

From this synthesis, we identified three recurring drivers of user dissatisfaction:

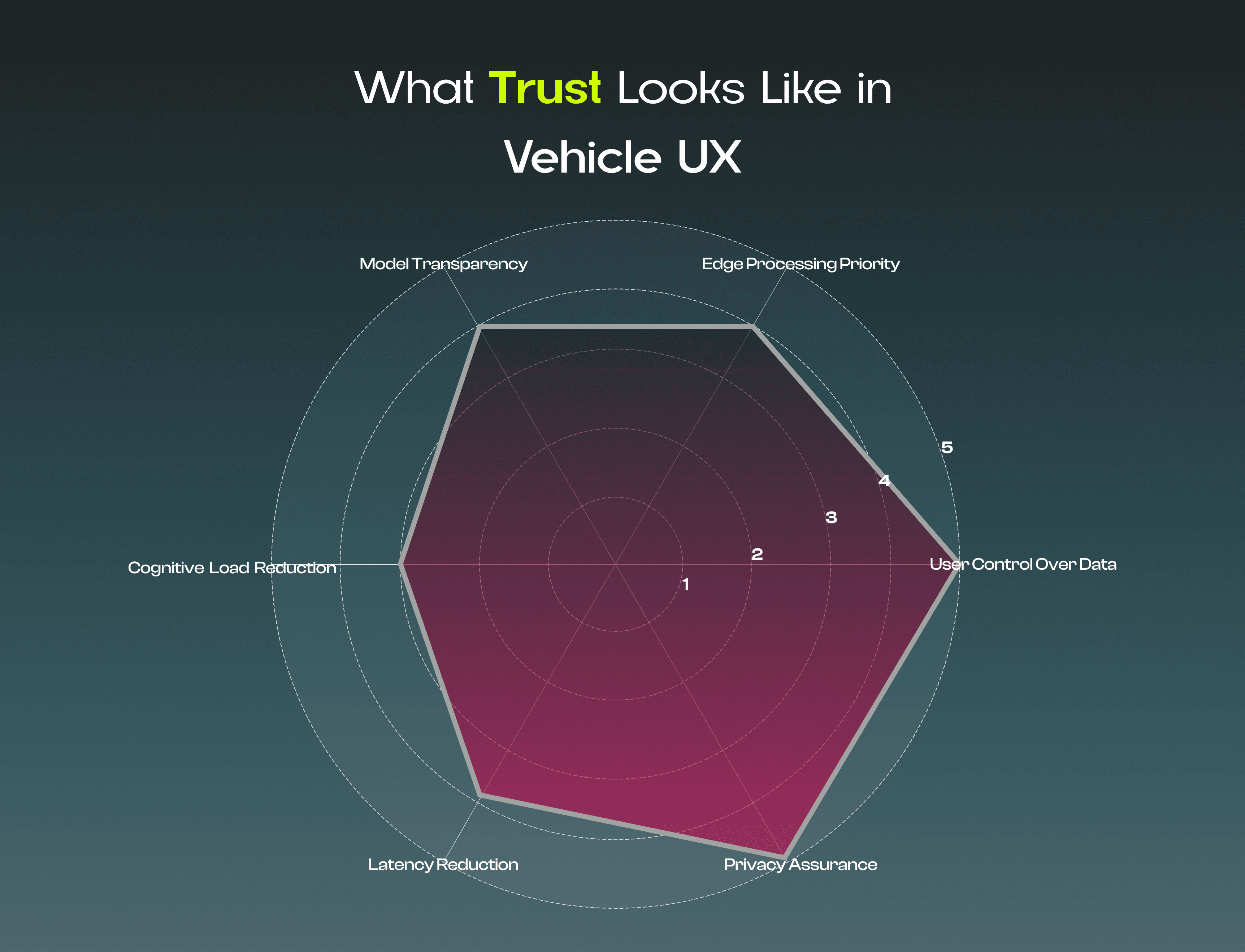

Design Principles

These insights shaped the core product principles that guided both system architecture and interface behavior:

Transparency

Clearly communicate how AI decisions are made and what data is used.

Control

Give users intuitive toggles to manage AI training, cloud syncing, and data usage.

Efficiency

Ensure interfaces reduce scan time and cognitive effort, especially while driving.

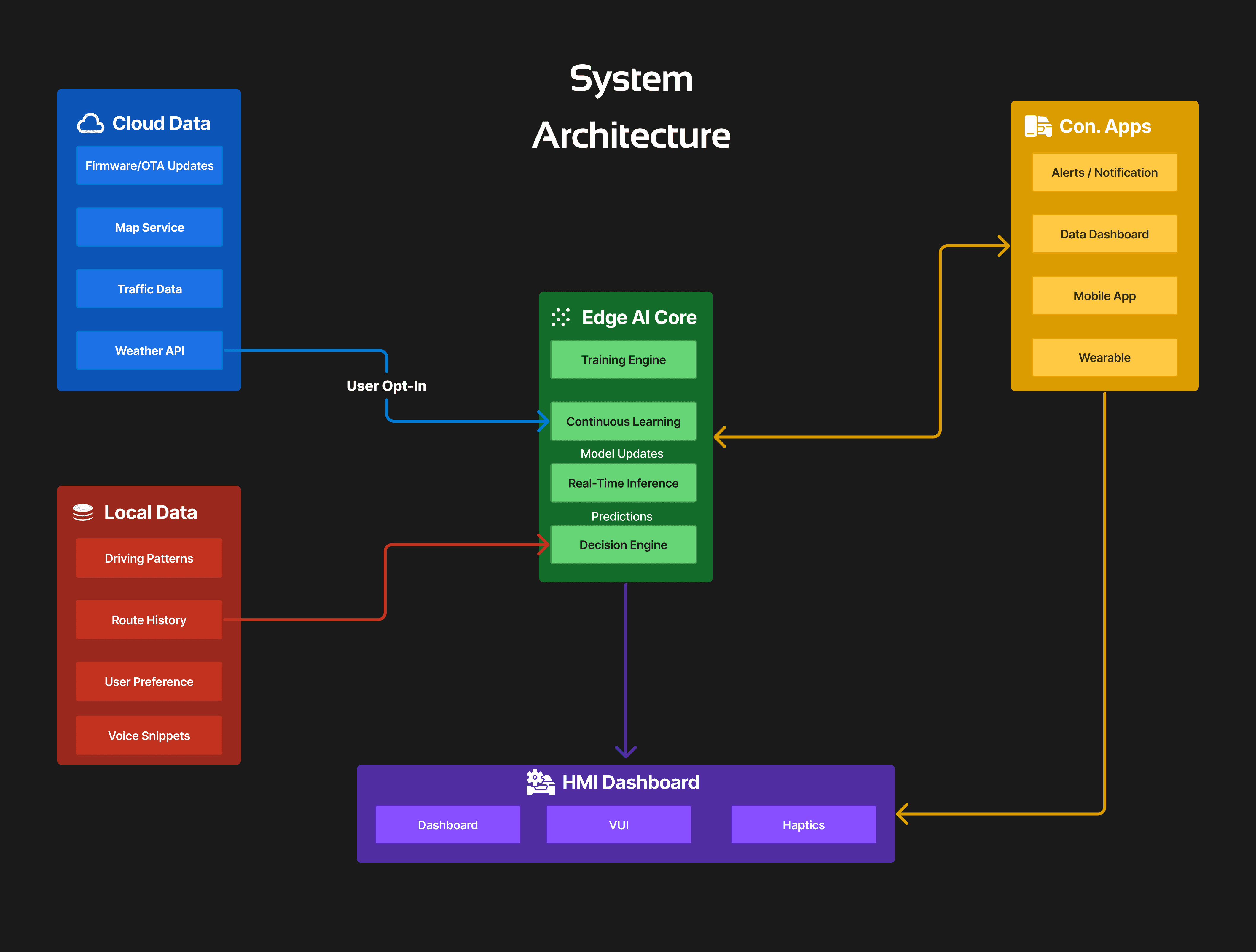

Core Architectural Principle

The WING Intelligence Platform is designed as a layered HMI system that distinguishes between:

Sensitive, Locally Trained Data

(e.g., driving behavior, routes, voice interactions)

Generalized, Cloud-Synced Data

(e.g., firmware updates, public map data)

By embedding edge AI inference directly in the vehicle's onboard system, the platform is designed to deliver real-time responsiveness while preserving privacy: all training, inference, and decision-making happen locally unless the user explicitly opts into cloud features.
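The local-first rule can be expressed as a small routing policy. The Python sketch below is illustrative only: `DataClass`, `Purpose`, `Destination`, `PrivacySettings`, and `route` are hypothetical names, not part of any published WING code. It assumes that cloud training and cloud sync are separate opt-in toggles (in the spirit of the Control principle) and that inference never leaves the vehicle.

```python
from dataclasses import dataclass
from enum import Enum


class DataClass(Enum):
    # Hypothetical labels for the two data layers described above.
    SENSITIVE = "sensitive"      # driving behavior, routes, voice interactions
    GENERALIZED = "generalized"  # firmware updates, public map data


class Purpose(Enum):
    INFERENCE = "inference"  # real-time decisions; always on-vehicle in this sketch
    TRAINING = "training"
    SYNC = "sync"


class Destination(Enum):
    LOCAL = "local"
    CLOUD = "cloud"


@dataclass(frozen=True)
class PrivacySettings:
    # Hypothetical user toggles; every cloud feature is opt-in (off by default).
    cloud_training: bool = False
    cloud_sync: bool = False


def route(data: DataClass, purpose: Purpose, settings: PrivacySettings) -> Destination:
    """Return where a record may be processed under a local-first policy."""
    if purpose is Purpose.INFERENCE:
        # Mission-critical inference always runs on the onboard system.
        return Destination.LOCAL
    if data is DataClass.GENERALIZED:
        # Generalized data (firmware, public maps) is the cloud-synced layer.
        return Destination.CLOUD
    # Sensitive data leaves the vehicle only for a purpose the user opted into.
    if purpose is Purpose.TRAINING and settings.cloud_training:
        return Destination.CLOUD
    if purpose is Purpose.SYNC and settings.cloud_sync:
        return Destination.CLOUD
    return Destination.LOCAL


if __name__ == "__main__":
    defaults = PrivacySettings()
    print(route(DataClass.SENSITIVE, Purpose.TRAINING, defaults))  # Destination.LOCAL
    opted_in = PrivacySettings(cloud_training=True)
    print(route(DataClass.SENSITIVE, Purpose.TRAINING, opted_in))  # Destination.CLOUD
```

Keeping inference unconditionally local in this sketch reflects the latency argument: the mission-critical path never waits on a network round trip, whatever the user's cloud settings are.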

The system is designed to sync with companion mobile and wearable apps for low-latency alerts, haptic feedback, and data review outside the vehicle.

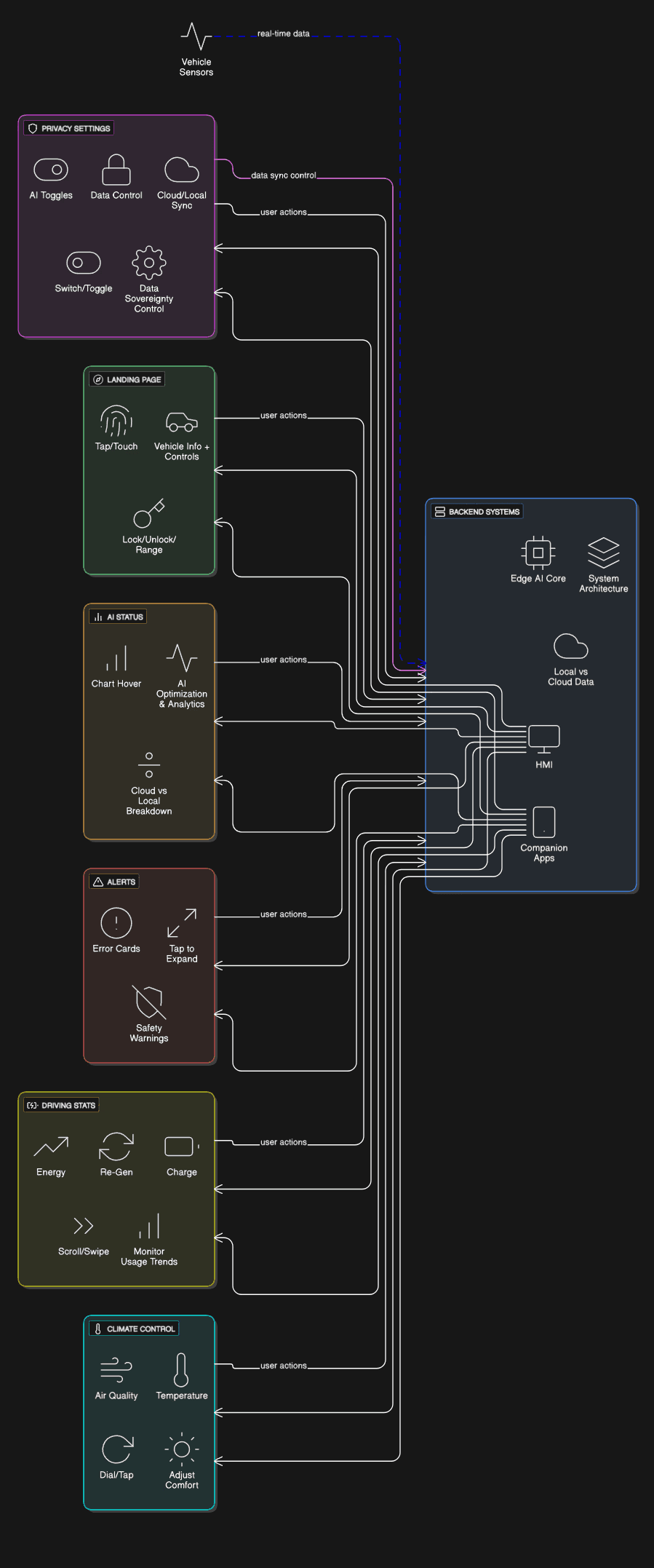

Core User Flows

Target Outcomes

Although the project is conceptual, we defined measurable success criteria for the platform:

| Metric | Why It Matters | Target |
|---|---|---|
| Visual Scan Time | Measures how quickly drivers process information | ↓ 30% vs. legacy systems |
| Latency (Edge vs. Cloud) | Quantifies real-time AI response | Edge < 20 ms |
| AI Trust Index | Perceived understanding + control | > 80% confidence |
| Cloud Opt-Out Rate | % of users keeping data local | > 65% sustained |
| Alert Response Time | Speed of decision-making after a prompt | ↓ 25% |
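The relative targets in the table (the ↓ rows) reduce to a percent-reduction check against a baseline: (baseline − new) / baseline × 100. Below is a minimal Python sketch of how a prototype evaluation might score itself against these targets. The function names, dictionary keys, units (seconds, milliseconds, percentages), and sample numbers are all hypothetical, and the "sustained" qualifier on the opt-out rate, which implies tracking over time, is not modeled.

```python
from __future__ import annotations

# Thresholds transcribed from the Target Outcomes table; how each metric is
# actually measured (units, baselines) is an assumption for illustration only.
SCAN_TIME_REDUCTION_PCT = 30.0
EDGE_LATENCY_MAX_MS = 20.0
TRUST_INDEX_MIN_PCT = 80.0
OPT_OUT_MIN_PCT = 65.0
ALERT_RESPONSE_REDUCTION_PCT = 25.0


def percent_reduction(baseline: float, candidate: float) -> float:
    """Relative reduction of `candidate` versus `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - candidate) / baseline * 100.0


def evaluate(m: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail per metric for one set of (hypothetical) measurements."""
    return {
        "visual_scan_time": percent_reduction(m["scan_legacy_s"], m["scan_s"])
        >= SCAN_TIME_REDUCTION_PCT,
        "edge_latency": m["edge_latency_ms"] < EDGE_LATENCY_MAX_MS,
        "ai_trust_index": m["trust_index_pct"] > TRUST_INDEX_MIN_PCT,
        "cloud_opt_out_rate": m["opt_out_pct"] > OPT_OUT_MIN_PCT,
        "alert_response_time": percent_reduction(m["alert_legacy_s"], m["alert_s"])
        >= ALERT_RESPONSE_REDUCTION_PCT,
    }


if __name__ == "__main__":
    # Made-up numbers that only demonstrate the arithmetic; NOT project results.
    sample = {
        "scan_legacy_s": 2.0, "scan_s": 1.3,    # 35% reduction
        "edge_latency_ms": 14.0,
        "trust_index_pct": 82.0,
        "opt_out_pct": 70.0,
        "alert_legacy_s": 1.2, "alert_s": 1.0,  # about 16.7% reduction
    }
    for metric, passed in evaluate(sample).items():
        print(f"{metric}: {'pass' if passed else 'miss'}")
```

With these placeholder inputs, every metric passes except alert response time, which shows how a single relative target can fail even when the absolute numbers look acceptable.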

Roadmap

| Phase | Focus | Status |
|---|---|---|
| ✅ Phase 1 | Conceptual architecture + problem framing | Complete |
| ✅ Phase 2 | High-fidelity prototyping | Complete |
| 🔄 Phase 3 | Companion Watch App (haptics, voice alerts) | In Progress |
| 🔜 Phase 4 | Pilot with OEM partner | Planned |

Iteration & Learnings

| Challenge | Iterative Decision |
|---|---|
| Overwhelming settings UI | Introduced progressive disclosure |
| Technical AI explanations caused confusion | Added simple model descriptions + visual cues |
| Static alerts often ignored | Shifted to passive cards + optional haptic feedback via the Watch app |
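The passive-card decision in the last row can be sketched as a channel-selection rule. In this hypothetical Python sketch, `Alert`, `CompanionPrefs`, and `plan_alert` are illustrative names only. The opt-in requirement follows the "optional haptic feedback" described above; the `time_sensitive` flag is an added assumption that limits wrist haptics to alerts worth interrupting the driver for.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    message: str
    time_sensitive: bool  # hypothetical flag; not defined in the case study


@dataclass(frozen=True)
class CompanionPrefs:
    # Hypothetical companion settings; haptics stay off until the user enables them.
    watch_paired: bool = False
    haptics_enabled: bool = False


def plan_alert(alert: Alert, prefs: CompanionPrefs) -> list[str]:
    """Choose output channels for one alert under a passive-first policy."""
    # Every alert appears as a non-blocking card on the in-vehicle display.
    channels = ["passive_card"]
    # Haptics are optional: they require a paired watch and user opt-in, plus a
    # time-sensitive alert (that last condition is an assumption of this sketch).
    if alert.time_sensitive and prefs.watch_paired and prefs.haptics_enabled:
        channels.append("watch_haptic")
    return channels


if __name__ == "__main__":
    example = Alert("Example time-sensitive alert", time_sensitive=True)
    print(plan_alert(example, CompanionPrefs()))  # ['passive_card']
    print(plan_alert(example, CompanionPrefs(watch_paired=True, haptics_enabled=True)))
```

Making the card the unconditional baseline and layering haptics on top keeps the in-vehicle experience consistent for drivers who never pair a watch.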

Conclusion

The WING Intelligence Platform redefines how we approach AI in vehicles: not as a black-box feature, but as a transparent, user-governed assistant. By embedding real-time, privacy-first AI directly at the edge, the platform aims to balance trust, performance, and user autonomy.

This case study extends the WING HMI ecosystem into the era of embedded intelligence, laying the foundation for future automotive experiences that are as respectful as they are responsive.